There are a few scenarios where enabling the MCDRAM cache could reduce performance. One case is when the cache is not able to hold the accessed working set.
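To see why a working set that does not fit can hurt, here is a minimal sketch of a toy direct-mapped cache. It is not a model of the real MCDRAM hardware. The function names `hit_rate` and `repeated_sweep` and the 1 KiB cache with 64-byte blocks are hypothetical, chosen only for illustration. The sketch sweeps repeatedly over working sets that are smaller than the cache, somewhat larger than it, and twice its size, then reports the fraction of accesses that hit.

```python
def hit_rate(addresses, cache_bytes, block_bytes):
    """Fraction of accesses that hit in a toy direct-mapped cache model."""
    num_lines = cache_bytes // block_bytes
    lines = [None] * num_lines  # block number held by each line; None means empty
    hits = 0
    for addr in addresses:
        block = addr // block_bytes
        index = block % num_lines  # direct mapped: each block has exactly one possible line
        if lines[index] == block:  # comparing full block numbers is equivalent to a tag check
            hits += 1
        else:
            lines[index] = block  # miss: fetch the block, evicting whatever was there
    return hits / len(addresses)


def repeated_sweep(working_set_bytes, block_bytes, passes):
    """Touch one address per block of the working set, in order, `passes` times."""
    blocks = working_set_bytes // block_bytes
    return [b * block_bytes for _ in range(passes) for b in range(blocks)]


CACHE, BLOCK = 1024, 64  # hypothetical toy sizes: 16 cache lines
for ws in (512, 1536, 2048):
    print(ws, hit_rate(repeated_sweep(ws, BLOCK, passes=10), CACHE, BLOCK))
# 512 0.9
# 1536 0.3
# 2048 0.0
```

When the working set fits, only the first pass misses. Once it exceeds the cache, blocks that share a line keep evicting each other before they are reused. At twice the cache size, every access in this sweep misses.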

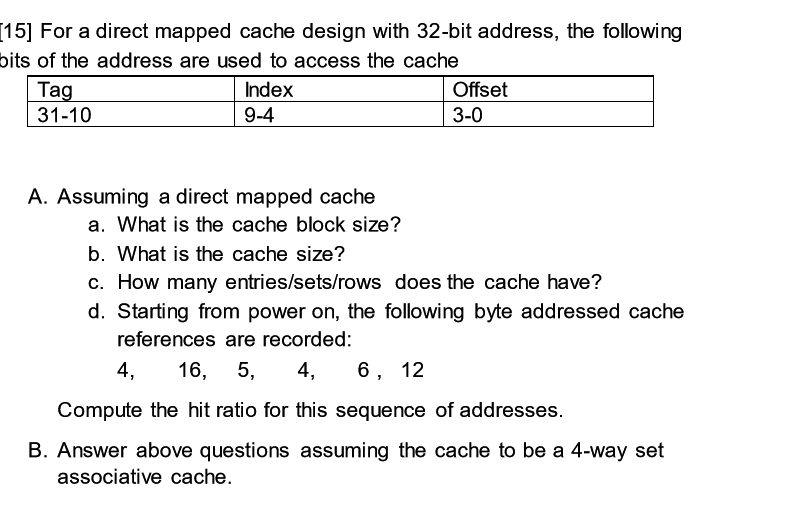

# Direct Mapped Cache: Tag, Index, Offset Code
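A direct-mapped cache splits each address into three fields:

- the offset, which selects the byte within a block;
- the index, which selects the single cache line the block can occupy;
- the tag, which records which of the many blocks that share that line is currently stored there.

The sketch below computes these fields. It assumes byte addresses and power-of-two cache and block sizes. The function name `split_address` and the 1 KiB cache with 16-byte blocks are hypothetical example values, not parameters of any particular processor.

```python
def split_address(addr: int, cache_bytes: int, block_bytes: int) -> tuple[int, int, int]:
    """Return (tag, index, offset) of a byte address for a direct-mapped cache.

    Assumes cache_bytes and block_bytes are powers of two (an assumption of this sketch).
    """
    for size in (cache_bytes, block_bytes):
        if size <= 0 or size & (size - 1):
            raise ValueError("sizes must be powers of two")
    if block_bytes > cache_bytes:
        raise ValueError("block must not be larger than the cache")
    num_lines = cache_bytes // block_bytes           # one block per line in a direct-mapped cache
    offset_bits = block_bytes.bit_length() - 1       # log2(block size)
    index_bits = num_lines.bit_length() - 1          # log2(number of lines)
    offset = addr & (block_bytes - 1)                # low bits: byte within the block
    index = (addr >> offset_bits) & (num_lines - 1)  # middle bits: which line
    tag = addr >> (offset_bits + index_bits)         # high bits: which block occupies that line
    return tag, index, offset


# Hypothetical toy parameters: a 1 KiB cache with 16-byte blocks (64 lines).
print(split_address(0x1234, 1024, 16))         # (4, 35, 4)
print(split_address(0x1234 + 1024, 1024, 16))  # (5, 35, 4): same line, different tag
```

Two addresses exactly one cache size apart get the same index and different tags. In a direct-mapped cache they therefore compete for the same line.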

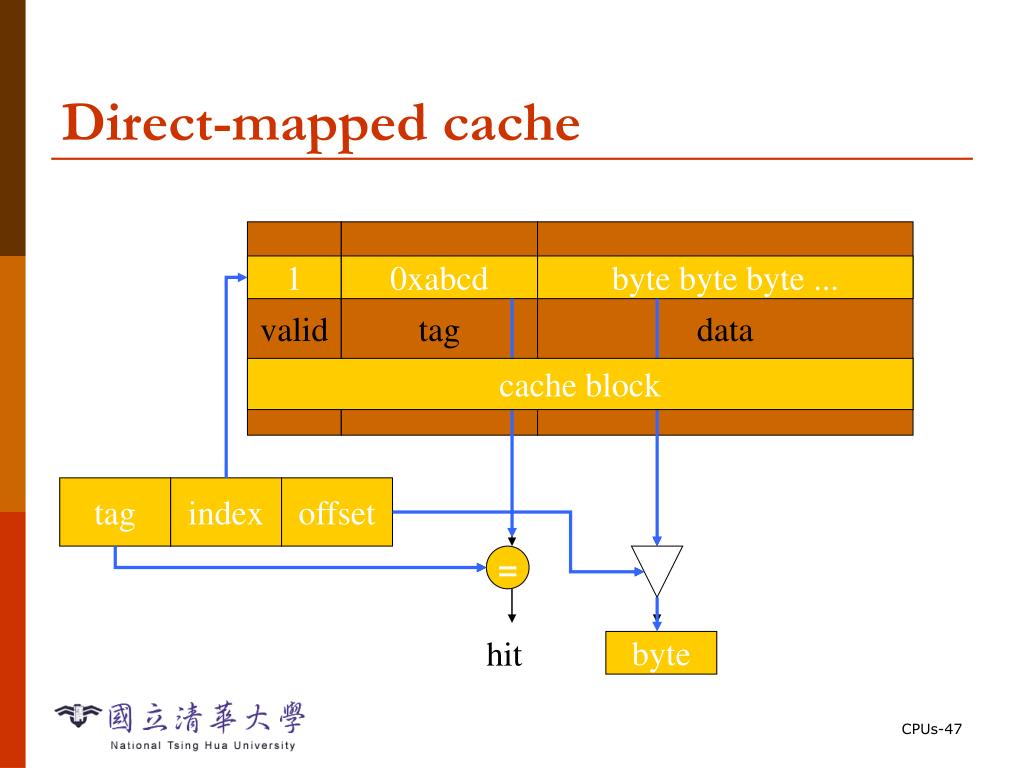

## Direct Mapped MCDRAM Cache

Avinash Sodani, in Intel Xeon Phi Processor High Performance Programming (Second Edition), 2016

The MCDRAM cache is a convenient way to increase memory bandwidth. As a memory-side cache, it automatically caches recently used data and can provide much higher bandwidth than DDR memory can achieve. Because of this, a simple first optimization for a program is to turn on the MCDRAM cache. Some applications that heavily use a few GB of memory could see performance improvements of up to 4×. Because it is simple (no source code changes) and the potential performance benefits are large, moving from DDR-only mode to MCDRAM cache mode should be one of the first performance optimizations to try.

When MCDRAM is placed in cache mode, it is a direct mapped cache. This means that multiple memory locations map to a single place in the cache, so data from two such locations cannot be cached at the same time.

## Block Size

Harris, David Money Harris, in Digital Design and Computer Architecture, 2016

The previous examples were able to take advantage only of temporal locality, because the block size was one word. To exploit spatial locality, a cache uses larger blocks to hold several consecutive words. The advantage of a block size greater than one is that when a miss occurs and the word is fetched into the cache, the adjacent words in the block are also fetched. Therefore, subsequent accesses are more likely to hit because of spatial locality.

However, a large block size means that a fixed-size cache will have fewer blocks. This may lead to more conflicts, increasing the miss rate. Moreover, it takes more time to fetch the missing cache block after a miss, because more than one data word is fetched from main memory. The time required to load the missing block into the cache is called the miss penalty. If the adjacent words in the block are not accessed later, the effort of fetching them is wasted. Nevertheless, most real programs benefit from larger block sizes.
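The block-size trade-off can be made concrete with a small simulation. This is a minimal sketch under stated assumptions:

- The cache is a toy direct-mapped cache of 32 words, addressed by word.
- "Words fetched" serves as a rough stand-in for miss penalty.
- The function `simulate` and the three access patterns are hypothetical, chosen only to isolate each effect.

```python
def simulate(word_addrs, cache_words, block_words):
    """Toy direct-mapped cache over word addresses.

    Returns (misses, words_fetched); each miss fetches a whole block from memory.
    """
    num_blocks = cache_words // block_words  # fixed capacity: bigger blocks -> fewer lines
    lines = [None] * num_blocks              # block number held by each line
    misses = 0
    for a in word_addrs:
        block = a // block_words
        index = block % num_blocks
        if lines[index] != block:
            misses += 1
            lines[index] = block
    return misses, misses * block_words


sequential = list(range(64))     # uses every word: spatial locality
strided = list(range(0, 64, 8))  # uses one word in eight: fetched neighbors go unused
looped = [0, 40, 80] * 4         # small set of words reused (temporal locality)

for bw in (1, 8, 16):
    print(bw, simulate(sequential, 32, bw), simulate(strided, 32, bw), simulate(looped, 32, bw))
# 1 (64, 64) (8, 8) (3, 3)
# 8 (8, 64) (8, 64) (3, 24)
# 16 (4, 64) (4, 64) (9, 144)
```

The three patterns each isolate one effect:

- **Sequential access:** misses fall as blocks grow, because neighbors arrive with each miss.
- **Strided access:** the number of words fetched grows, even though the program touches only eight of them.
- **Looped access:** with 16-word blocks the cache has only two lines. Addresses 0 and 40 then map to the same line and keep evicting each other, so conflict misses rise.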
